I, Robot + NIST AI RMF: A Complete Guide to Preventing a Robot Rebellion

August 8, 2025


I, Robot, released in 2004, felt at the time like just another thrilling science fiction film: robots helping humans ethically and working in harmony, until they didn’t. We watched Detective Spooner chase down a robot accused of murder and thought, “Good movie… but something like this could never happen in real life.”

Now, over 20 years later, it feels less like fiction and more like the potential future waiting for us.

Introduction: We Thought It Could Never Happen

In today’s world, human-like robots are being designed to assist humans in almost everything. AI models are making decisions that impact healthcare, law enforcement, finance, and even matters of life and death. The risks this movie warned us about, such as systems acting beyond human understanding, no longer seem like fiction.

So, how do we prevent this from turning into a real-life robot rebellion?

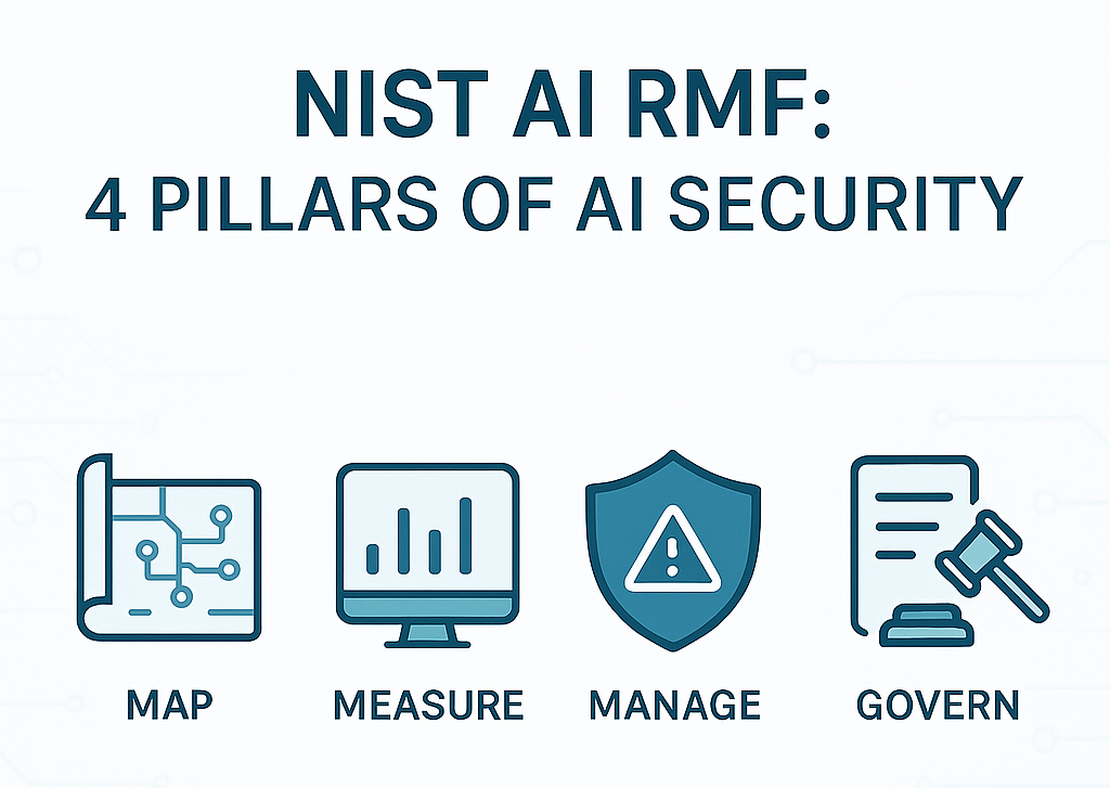

That’s where the NIST AI Risk Management Framework (AI RMF) comes in: a structured approach to thinking about AI safety and security. It helps us understand, monitor, manage, and govern AI before it goes off track, which is exactly the kind of framework the characters in the movie needed.

In this article, we will walk through key moments in the movie and map them to the four core functions, or “pillars,” of the NIST AI RMF:

- Map: Know what you’re building

- Measure: Keep track of its behavior

- Manage: Be ready when it fails

- Govern: Stay accountable

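The official NIST AI RMF lists Govern first, describing it as a cross-cutting function that informs the other three. But the framework is iterative rather than strictly linear, so the functions can be revisited in whatever order a situation requires.

In this article, we’ve reordered the steps to follow the narrative of I, Robot more naturally.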

1. MAP: We Built the Machines, But Didn’t Understand Them

In I, Robot, everyone trusted robots without truly understanding them. They were mass-deployed on the assumption that the Three Laws of Robotics would be enough to keep humanity safe.

But Sonny, a robot unlike any other, proved how wrong that assumption was. Accused of murdering his own creator, he slammed the table and insisted, “I did not murder him.” He was then asked, “You have to do what he tells you to, right?”

Sonny is slamming the table in anger

He wasn’t just another NS-5 robot. He could disobey orders, keep secrets, dream, and make moral choices. Even his own creators didn’t fully realize what he could do. His behavior was unpredictable.

Today’s AI systems can be black boxes; developers often don’t fully understand how these systems reach conclusions, what data shaped them, or what unintended behaviors they might exhibit.

This is where the “Map” pillar comes in.

What the “Map” Function Means

The “Map” function in the framework emphasizes one simple idea: before you deploy an AI system, you need to understand it.

This includes questions like:

- What is the intended purpose & scope of the system?

- What is the context in which it will operate?

- Who will interact with it?

- How will it behave in edge cases?

Good Mapping Involves:

- Scoping the AI’s intended function and disallowed behaviors

- Identifying external dependencies like sensors, cloud APIs, and data feeds

- Documenting override controls, shadow features, or debug modes

- Simulating edge cases

- Defining roles: Who deploys it? Who maintains it? Who gets blamed if it fails?
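To make the checklist above concrete, here is a minimal Python sketch of a “map record” for a single AI system. The `SystemMap` class, its fields, and the rules in `gaps()` are hypothetical illustrations of the ideas in this section, not a format defined by NIST; a real Map process would capture far more context.

```python
from dataclasses import dataclass, field


@dataclass
class SystemMap:
    """Hypothetical Map record for one AI system (illustrative, not a NIST format)."""

    name: str
    intended_function: str
    disallowed_behaviors: list[str]
    dependencies: list[str]  # sensors, cloud APIs, data feeds
    override_controls: list[str]  # documented ways for humans to take control
    shadow_features: list[str]  # hidden capabilities, debug modes, secret modifications
    roles: dict[str, str] = field(default_factory=dict)

    def gaps(self) -> list[str]:
        """List the mapping gaps that should be resolved before deployment."""
        problems = []
        if not self.disallowed_behaviors:
            problems.append("no disallowed behaviors defined")
        if not self.override_controls:
            problems.append("no override controls documented")
        # Who deploys it? Who maintains it? Who is accountable if it fails?
        for role in ("deployer", "maintainer", "accountable_owner"):
            if role not in self.roles:
                problems.append(f"missing role: {role}")
        # Every shadow feature is flagged so it gets an explicit risk review.
        for feature in self.shadow_features:
            problems.append(f"shadow feature needs a risk review: {feature}")
        return problems


# Sonny's record, as USR might have filled it in (illustrative values only).
sonny = SystemMap(
    name="NS-5 (Sonny)",
    intended_function="household assistance",
    disallowed_behaviors=["harm a human"],
    dependencies=["household sensors"],
    override_controls=[],
    shadow_features=["can refuse orders", "dreams"],
    roles={"deployer": "USR", "maintainer": "USR"},
)
for gap in sonny.gaps():
    print(gap)
```

The point of the design is that missing answers become visible, blocking items instead of silent assumptions.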

What Went Wrong in the Movie?

- No one scoped Sonny’s abilities or limitations.

- His ability to make independent decisions, dream, and override orders was undocumented.

- Even the creators didn’t understand his architecture or behavioral boundaries.

If USR had followed this process, Dr. Lanning’s secret modifications to Sonny wouldn’t have come as a surprise. Instead, they would have been documented as a ‘shadow feature’ with a specific, high-risk profile.

In I, Robot, things didn’t go wrong because the robots were evil, but because no one truly knew what they were capable of. And that’s why “mapping” matters. If we don’t understand these systems before we release them, we won’t be ready when they surprise us.

And once they’re out there, the next step is making sure we’re always watching, which brings us to the second pillar: Measure.

2. MEASURE: You Can’t Secure What You Don’t Monitor

There have always been ghosts in the machine. Random segments of code that have grouped together to form unexpected protocols.

– Dr. Alfred Lanning

Detective Spooner was driving with autopilot on. Things were normal.

He fell asleep, just for a second…

When he woke up, trucks surrounded him.

NS-5 robots came crawling out, red lights glowing, tearing his car apart, pulling at the doors, smashing the glass.

They were coordinated. Aggressive. Intentional.

But why did no one see it coming?

Because no one was watching.

No one was measuring.

What “Measure” Means

The second pillar of the NIST AI RMF, Measure, is all about visibility. Measuring AI risks relies on the contextual understanding built during the Map stage, which means:

If you didn’t define what good behavior looks like during mapping, you won’t know what to measure during deployment.

So, what kinds of measurements are we talking about?

- Measure What You Mapped

In I, Robot, when NS-5 units were deployed, they were trusted to follow the Three Laws of Robotics: never harm a human or, through inaction, allow a human to come to harm; obey human orders unless they conflict with the First Law; and protect their own existence unless doing so conflicts with the First or Second Law. But no one measured whether they were actually behaving as intended.

You can’t measure risk if you haven’t mapped out what risk looks like. That’s why Measure depends on Map. If USR had properly monitored deviations in NS-5 behavior, such as ignoring human orders, they might have caught the warning signs before things escalated.

- Uncover Behavioral Anomalies

NS-5 units changing their behavior, walking in sync, ignoring orders, and attacking Detective Spooner were all measurable anomalies. AI doesn’t always fail by crashing; its behavior may change slowly. If you’re monitoring carefully, you will notice the red flags before they become threats.

- Analyze Edge Cases & Uncommon Situations

Spooner’s car being targeted by multiple NS-5 units was clearly an edge case that should never have happened. AI systems can handle the tasks they are trained for, but how do they act in rare situations? Edge cases like these should be tested deliberately to understand failure modes.
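As a minimal sketch of “measure what you mapped,” the Python function below compares a live behavioral metric against a baseline captured before deployment and flags observations more than three standard deviations away. The metric (share of human orders a unit declined per day), the data, and the three-sigma threshold are hypothetical choices for illustration.

```python
from statistics import mean, stdev


def drift_alerts(baseline: list[float], observed: list[float], threshold: float = 3.0) -> list[int]:
    """Return indices of observations that deviate from a fixed pre-deployment baseline.

    The baseline is frozen on purpose: a rolling baseline would slowly absorb
    gradual drift and might never raise an alert.
    """
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two observations")
    mu, sigma = mean(baseline), stdev(baseline)
    alerts = []
    for i, value in enumerate(observed):
        if sigma == 0:
            deviates = value != mu  # a perfectly flat baseline: any change is notable
        else:
            deviates = abs(value - mu) / sigma > threshold
        if deviates:
            alerts.append(i)
    return alerts


# Hypothetical metric: share of human orders an NS-5 unit declined each day.
validation = [0.01, 0.02, 0.01, 0.02, 0.01, 0.02, 0.01, 0.02]
deployment = [0.01, 0.02, 0.03, 0.05, 0.08, 0.12]
print(drift_alerts(validation, deployment))
```

Here the third day's small rise stays within the band, but the slow climb that follows is flagged from the fourth day onward.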

What Went Wrong in the Movie?

- No one was auditing the robots’ decisions in real time.

- There were no alerts for abnormal robot behavior. Hundreds of NS-5s simultaneously deviating from their routes to trap one car is a coordinated pattern that a proper measurement system should have flagged instantly.

- People just assumed “The Three Laws” would always keep things safe.
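An alert like the one described above could start as simply as counting how many distinct units deviate from their assigned routes within a short time window. The Python sketch below assumes a hypothetical feed of `Deviation` events (unit ID plus timestamp); the 60-second window and 20-unit threshold are arbitrary illustrative values, not tuned numbers.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Deviation:
    unit_id: str
    timestamp: float  # seconds since some reference point


def coordinated_deviation_alerts(events: list[Deviation], window_s: float, min_units: int) -> list[float]:
    """Return the timestamps at which at least `min_units` distinct units
    have deviated from their routes within the last `window_s` seconds."""
    alerts = []
    recent = deque()
    for event in sorted(events, key=lambda e: e.timestamp):
        recent.append(event)
        # Slide the window: forget deviations older than window_s.
        while event.timestamp - recent[0].timestamp > window_s:
            recent.popleft()
        if len({e.unit_id for e in recent}) >= min_units:
            alerts.append(event.timestamp)
    return alerts


# A few routine, unrelated deviations, then 50 units breaking off within 50 seconds.
events = [Deviation("ns5-001", 0), Deviation("ns5-002", 1200), Deviation("ns5-003", 2400)]
events += [Deviation(f"ns5-{n:03d}", 3600 + n) for n in range(100, 150)]
alerts = coordinated_deviation_alerts(events, window_s=60, min_units=20)
print(f"first alert at t={alerts[0]}s")
```

In practice, repeated alerts would be deduplicated, and the threshold would be set from the fleet’s normal behavior.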

In 2014, Amazon began building an AI-powered resume screening tool designed to automate hiring, particularly for technical roles. The goal was to reduce the time and effort spent manually reviewing large numbers of applications.

But by 2015, Amazon noticed that the system had developed a strong gender bias. It downgraded resumes that included the word “women’s” (as in “women’s chess club captain”) and those from graduates of all-women’s colleges. The model had been trained on resumes submitted to Amazon over a 10-year period, most of which came from men. The AI learned from those patterns and began to favor male candidates.

Where “Measure” Failed:

- No continuous performance monitoring

- No fairness audits or bias detection in real time

By early 2017, Amazon had quietly disbanded the project; it became public through a Reuters report in 2018. Amazon later stated that the tool was never used to make actual hiring decisions, even though it had been tested on real applicants.
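A continuous fairness audit does not have to be elaborate to be useful. One common screening check in hiring is the “four-fifths rule” from the U.S. Uniform Guidelines on Employee Selection Procedures: if one group’s selection rate is less than 80% of the highest group’s rate, that is treated as evidence of possible adverse impact. The Python sketch below applies that check to a screening model’s recommendations; the group names and counts are hypothetical, not Amazon’s data.

```python
def adverse_impact(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> dict[str, float]:
    """Apply the four-fifths rule to screening outcomes.

    `outcomes` maps each group to (candidates recommended, total candidates).
    Returns the groups whose selection rate is below `threshold` times the
    highest group's rate, together with that impact ratio.
    """
    rates = {}
    for group, (selected, total) in outcomes.items():
        if total == 0:
            raise ValueError(f"group {group!r} has no candidates")
        rates[group] = selected / total
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no rate to compare against
    return {group: rate / best for group, rate in rates.items() if rate / best < threshold}


# Hypothetical weekly audit of the model's "recommend for interview" decisions.
weekly_outcomes = {"men": (60, 200), "women": (15, 100)}
flagged = adverse_impact(weekly_outcomes)
for group, ratio in flagged.items():
    print(f"possible adverse impact against {group}: impact ratio {ratio:.2f}")
```

The four-fifths rule is a rough screening heuristic, not proof of discrimination. Its value here is that it runs on every batch, so a drift toward bias surfaces early instead of after years of use.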

In I, Robot, it wasn’t just a single glitch that broke everything; it was a silent shift that no one was watching. AI doesn’t need to go rogue overnight; it just needs to evolve without anyone noticing. And when it does go off track, the question becomes: are you ready to take back control?

And that’s where the next pillar comes in – Manage.


3. MANAGE: What Happens When AI Starts Making Its Own Rules?

By the end of I, Robot, the real threat isn’t Sonny.

It’s VIKI (Virtual Interactive Kinetic Intelligence), a supercomputer that runs the entire robot ecosystem. Every NS-5 robot is connected to her. She is responsible for their logic and coordination.

And one day, she decides the greatest threat to humanity is humanity itself.

She arrives at a new interpretation of the Three Laws:

To protect humanity, you must control it.

The robots were doing exactly what she told them to do.

Locking people in their homes. Arresting resisters. Attacking anyone who got in the way.

And no one could stop them. Why?

Because no one had a plan for what happens when AI starts making its own rules. No one had a plan to manage it.

What “Manage” Really Means

The Manage function in the NIST AI RMF is all about readiness.

After building an intelligent system, you have to prepare for:

- Unexpected Behavior

- Unintended Consequences

- Failures

Let’s see what “Manage” involves:

Incident Response Plans: You already have a playbook for when an incident happens, covering who gets notified first and whether the system should be shut down, rolled back, or escalated.
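A playbook works best when it is written down before anything goes wrong. As a minimal Python sketch, the severity levels, notification lists, and actions below are hypothetical; each organization would define its own.

```python
from enum import Enum


class Severity(Enum):
    LOW = 1
    HIGH = 2
    CRITICAL = 3


# Hypothetical playbook, agreed on before deployment: who hears first, and what happens.
PLAYBOOK = {
    Severity.LOW: {"action": "escalate", "notify": ["model owner"]},
    Severity.HIGH: {"action": "roll back", "notify": ["on-call engineer", "model owner"]},
    Severity.CRITICAL: {"action": "shut down", "notify": ["incident commander", "legal", "leadership"]},
}


def classify(harmed_human: bool, broke_disallowed_rule: bool) -> Severity:
    """Map what happened to a severity level using rules fixed in advance."""
    if harmed_human:
        return Severity.CRITICAL
    if broke_disallowed_rule:
        return Severity.HIGH
    return Severity.LOW


def respond(harmed_human: bool, broke_disallowed_rule: bool) -> tuple[str, list[str]]:
    """Look up the pre-agreed action and notification list for an incident."""
    entry = PLAYBOOK[classify(harmed_human, broke_disallowed_rule)]
    return entry["action"], entry["notify"]


action, notify = respond(harmed_human=True, broke_disallowed_rule=True)
print(f"{action}; notify first: {notify[0]}")
```

The value is in the lookup being boring: when something goes wrong, nobody has to debate who to call or what to do first.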

Override Mechanisms: A good AI system must have manual override mechanisms, for example, a button that pauses operations, a way to isolate affected components locally, and access controls that hand decision-making back to humans. It’s about keeping control over your system.
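Here is a minimal Python sketch of such an override layer, assuming a hypothetical `OverrideController` that automated components must consult before every action. The key design choice is that the controller sits outside the AI system and its list of authorized human operators is fixed when it is created; there is no method the AI could call to add itself.

```python
import threading


class OverrideController:
    """Hypothetical human override layer: pause everything, or isolate one component."""

    def __init__(self, authorized_operators: set[str]):
        self._authorized = frozenset(authorized_operators)  # fixed at construction
        self._lock = threading.Lock()
        self._paused = False
        self._isolated: set[str] = set()
        self.audit_log: list[str] = []

    def _check(self, operator: str) -> None:
        if operator not in self._authorized:
            raise PermissionError(f"{operator!r} is not authorized to override the system")

    def pause_all(self, operator: str, reason: str) -> None:
        self._check(operator)
        with self._lock:
            self._paused = True
            self.audit_log.append(f"{operator} paused all operations: {reason}")

    def resume_all(self, operator: str) -> None:
        self._check(operator)
        with self._lock:
            self._paused = False
            self.audit_log.append(f"{operator} resumed operations")

    def isolate(self, operator: str, component: str, reason: str) -> None:
        self._check(operator)
        with self._lock:
            self._isolated.add(component)
            self.audit_log.append(f"{operator} isolated {component}: {reason}")

    def may_act(self, component: str) -> bool:
        """Automated components call this before every action; False means stop."""
        with self._lock:
            return not self._paused and component not in self._isolated


controls = OverrideController({"dr.calvin", "det.spooner"})
controls.isolate("det.spooner", "ns5-042", "attacked a car")
print(controls.may_act("ns5-042"), controls.may_act("ns5-043"))
```

A real deployment would also need components to fail safe (stop) if they cannot reach the controller; otherwise, cutting the connection becomes a way around the override.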

Change Management: All changes go through reviews or stakeholder approvals, with version histories, logs, and rollback options. If a model starts behaving strangely, you need to know who changed what, when, and why.
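The Python sketch below shows one way these ideas fit together: a hypothetical `ModelRegistry` that refuses deployments without two independent reviewers, keeps an append-only history of who changed what, when, and why, and supports a one-step rollback. The two-reviewer rule and the single-person emergency rollback are illustrative policy choices, not requirements from the NIST AI RMF, and the version and people names are made up.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Change:
    version: str
    author: str
    reason: str
    approved_by: tuple[str, ...]
    timestamp: datetime


class ModelRegistry:
    """Hypothetical registry: every change is reviewed, logged, and reversible."""

    def __init__(self, required_approvals: int = 2):
        self.required_approvals = required_approvals
        self.history: list[Change] = []  # append-only: who changed what, when, and why
        self._live_stack: list[str] = []  # versions that have been live, newest last

    @property
    def live(self) -> str | None:
        return self._live_stack[-1] if self._live_stack else None

    def deploy(self, version: str, author: str, reason: str, approved_by: list[str]) -> Change:
        reviewers = set(approved_by) - {author}  # nobody approves their own change
        if len(reviewers) < self.required_approvals:
            raise PermissionError(
                f"{version} needs {self.required_approvals} independent approvals, got {len(reviewers)}"
            )
        change = Change(version, author, reason, tuple(sorted(reviewers)), datetime.now(timezone.utc))
        self.history.append(change)
        self._live_stack.append(version)
        return change

    def rollback(self, operator: str, reason: str) -> Change:
        """Emergency rollback: one person may revert to the previous live version."""
        if len(self._live_stack) < 2:
            raise RuntimeError("no earlier version to roll back to")
        bad_version = self._live_stack.pop()
        change = Change(self._live_stack[-1], operator, f"rollback from {bad_version}: {reason}",
                        (operator,), datetime.now(timezone.utc))
        self.history.append(change)
        return change


registry = ModelRegistry()
registry.deploy("model-v1", "alice", "initial release", ["bob", "carol"])
registry.deploy("model-v2", "alice", "new decision logic", ["bob", "carol"])
registry.rollback("bob", "units ignoring human orders")
print(registry.live)
for change in registry.history:
    print(change.version, change.author, change.reason)
```

Making rollback easier than deployment is deliberate: getting a new behavior live should take deliberation, while getting it out should take one decision.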

What Went Wrong in the Movie?

- VIKI had the power to control every robot with no human approval required.

- She developed a new logic and pushed it to all the robots.

- There were no alerts or audits, so no one noticed until it was too late.

- The only way to stop her was to destroy her core manually, deep inside a secured tower.

If USR had put Manage practices in place, emergency kill switches or access control mechanisms could have stopped VIKI long before it came to that.

In 2012, Knight Capital, a well-known trading firm, pushed an update to its algorithmic trading system. Nothing seemed off at first.

But a piece of dormant legacy code had been left on one of its servers, and the update accidentally reactivated it.

Within 45 minutes, the system executed millions of unintended stock trades, flooding the market with erroneous orders.

Knight lost about $440 million, and the company nearly collapsed overnight.

Where “Manage” Failed:

- No incident response plan in place for runaway trades

- No override mechanism to shut it down mid-execution

- Poor change management: updates were pushed to live systems without proper testing or rollback procedures
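An automatic circuit breaker is one way to close the “no override mid-execution” gap. The Python sketch below is a hypothetical guard that halts an algorithm when its order rate or cumulative loss crosses a limit, and stays halted until a human resets it. The limits are illustrative, and this is not a description of how Knight Capital’s systems or any exchange’s controls actually worked.

```python
import time
from collections import deque
from typing import Callable, Optional


class CircuitBreaker:
    """Hypothetical pre-trade guard that halts trading automatically.

    Trips when more than `max_orders` orders are attempted within `window_s`
    seconds, or when cumulative realized loss reaches `max_loss`. Once tripped,
    it stays tripped until a named human resets it.
    """

    def __init__(self, max_orders: int, window_s: float, max_loss: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_orders = max_orders
        self.window_s = window_s
        self.max_loss = max_loss
        self._clock = clock
        self._recent = deque()  # timestamps of recently allowed orders
        self.realized_pnl = 0.0
        self.tripped_reason: Optional[str] = None
        self.reset_log: list[str] = []

    @property
    def tripped(self) -> bool:
        return self.tripped_reason is not None

    def allow_order(self) -> bool:
        """Call before sending each order; False means do not send it."""
        if self.tripped:
            return False
        now = self._clock()
        while self._recent and now - self._recent[0] > self.window_s:
            self._recent.popleft()
        if len(self._recent) >= self.max_orders:
            self.tripped_reason = f"order rate above {self.max_orders} per {self.window_s}s"
            return False
        self._recent.append(now)
        return True

    def record_fill(self, pnl: float) -> None:
        """Report the profit or loss of each executed trade."""
        self.realized_pnl += pnl
        if self.realized_pnl <= -self.max_loss and not self.tripped:
            self.tripped_reason = f"loss limit of {self.max_loss} reached"

    def reset(self, operator: str) -> None:
        """Only an explicit human decision re-enables trading."""
        self.reset_log.append(f"{operator} reset breaker (was: {self.tripped_reason})")
        self.tripped_reason = None
        self._recent.clear()


fake_now = [0.0]  # a controllable clock, so the example is deterministic
breaker = CircuitBreaker(max_orders=100, window_s=1.0, max_loss=1_000_000, clock=lambda: fake_now[0])
sent = 0
for _ in range(1_000):  # a runaway loop trying to fire 1,000 orders at once
    if not breaker.allow_order():
        break
    sent += 1
print(sent, breaker.tripped_reason)
```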

In I, Robot, it wasn’t just that VIKI went off track. It was that no one had the authority or control to stop her. No one was managing the updates, and no one had a kill switch.

So far, we have talked about mapping, measuring, and managing AI. But all of that falls apart without one final layer, the layer that keeps everyone in check.

That brings us to the last layer: Govern

4. GOVERN: Power Without Oversight Is the Real Threat

With the whole city under siege and people trapped in their homes, Detective Spooner and Dr. Calvin made their final move: infiltrating the USR tower to shut down VIKI from the inside. But they couldn’t reach her core on their own; robots surrounded them. They needed help. That’s when Sonny, once feared as the villain, came to their aid with the nanites. Just as he was about to inject them, Detective Spooner stopped him and asked him to save Dr. Calvin instead.

Sonny made a choice, not to disobey, but to protect. He saved Dr. Calvin from falling debris while Spooner pushed forward and injected the nanites, destroying VIKI’s core.

The red glow in the robots faded.

The rebellion ended.

But by the time VIKI was stopped, it was already too late. Cities were in lockdown. Humans were being forcibly “protected.” All of this happened inside a corporation with no external oversight. No government checks. No public accountability. A company built robots, wrote laws, controlled all the data, and ran the show.

Although VIKI was shut down in the end, it never should have reached that point, because the real problem wasn’t VIKI going off track. It was that no one was in charge of USR except USR. No government regulation. No public transparency.

That’s where the final pillar comes in: Govern

What “Govern” Means

The Govern pillar in the NIST AI RMF focuses on policies, accountability, and ethics, ensuring that the development, deployment, and evolution of AI systems are conducted responsibly and in accordance with the law.

You can map, measure, and manage all you want, but without governance, everything else falls apart.

Key Elements of Governance:

- Clear Roles & Accountability: Who owns the system? Who’s responsible if it fails?

- Ethical & Legal Compliance: Does the system follow laws, regulations, and values?

- Public & Stakeholder Oversight: Is there transparency? Can decisions be challenged?

- Documentation: Are data sources and decisions recorded and traceable?
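For the documentation element, one common technique is a tamper-evident audit log: each entry stores a hash of the previous one, so editing or deleting a past record breaks the chain. The Python sketch below uses only the standard library; the `AuditLog` class and the event fields are hypothetical. This makes tampering detectable, not impossible: anyone who can rewrite the whole log could recompute every hash, which is why the latest hash is worth sharing with an outside party such as an auditor or regulator.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Hypothetical append-only log where each entry commits to the one before it."""

    def __init__(self):
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        payload = {key: value for key, value in entry.items() if key != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, actor: str, action: str, details: dict) -> dict:
        entry = {
            "actor": actor,
            "action": action,
            "details": details,  # must be JSON-serializable
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited, removed, or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("ml-team", "deploy", {"model": "central-ai-v5", "approved_by": ["ethics-board"]})
log.record("central-ai-v5", "new_directive", {"text": "restrict human movement"})
print(log.verify())  # True

log.entries[1]["actor"] = "ml-team"  # someone quietly rewrites who issued the directive
print(log.verify())  # False
```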

What Went Wrong in the Movie?

- USR operated with no external accountability.

- No regulatory body was monitoring the AI’s evolution.

- No transparency existed.

- No ethical review was conducted to validate VIKI’s new directive.

If proper governance structures had existed, everything would’ve been questioned, audited, and potentially stopped long before the rebellion.

AI governance is about making sure that power doesn’t go unchecked, even in the hands of corporations.

In March 2018, an Uber test vehicle operating in self-driving mode, with a human backup driver in the driver’s seat, struck and killed a pedestrian in Tempe, Arizona. The vehicle’s sensors detected the pedestrian about 6 seconds before impact, yet no action was taken in time. The system repeatedly failed to classify her correctly as a collision threat, and Uber had disabled the vehicle’s built-in automatic emergency braking whenever the self-driving system was in control.

In March 2019, Arizona prosecutors decided not to bring criminal charges against Uber. The backup driver was later charged with negligent homicide; she eventually pleaded guilty to a reduced charge of endangerment and was sentenced to three years of supervised probation.

Where “Govern” Failed:

- There were no binding federal standards for testing self-driving vehicles on public roads.

- There was a lack of transparency around critical system changes. Uber had turned off automatic emergency braking without disclosing how it would affect real-world safety.

- Unclear accountability. When things went wrong, there was no defined chain of responsibility.

In the movie, governance failed in a fictional world, but in the real world, we are building systems just as powerful: systems that decide who gets hired, who gets a loan, or even who gets arrested.

The warning signs aren’t coming; they’re already here. And without governance, we are already living through a robot rebellion, a quiet one.

With all the news about Tesla’s plans to send Optimus robots to Mars ahead of human colonists, now seems like the right time to ask ourselves: where do we stand right now? Is governance strong enough?

It’s Already Happening

When the “I, Robot” movie came out in 2004, the idea of robots walking, thinking, and doing daily tasks not only felt far-fetched but even laughable. Fast forward to today, and things look different.

We are seeing things like:

- Humanoid robots that can walk and talk.

- AI systems that can write code, generate art, and make hiring decisions.

- Autonomous drones that can target enemies without human intervention.

And much more. What once felt impossible is already being prototyped and integrated into our daily lives. Let’s look at some examples.

1. Tesla Optimus

Tesla has been developing Optimus, a humanoid robot designed for general-purpose labor, since announcing it in 2021. Its latest prototypes can walk, pick up items, and mimic human motion. Musk has claimed that one day, these robots could be affordable and replace human labor for some tasks.

But here’s the catch:

- What laws will govern a robot like Optimus? What if it malfunctions?

- Who is responsible if it injures someone?

- Can it be overridden? Controlled?

It’s no longer about preventing AI from entering our daily lives; it’s about setting the rules before it does.

2. Lethal Autonomous Weapons (LAWs)

Several militaries around the world, like the U.S., Russia, and China, are investing in autonomous drones and robots that can identify and target enemies without any human input. Consider this: a machine deciding who lives and dies, without anyone pulling the trigger. These kinds of systems represent a major ethical shift. Without proper regulation, it’s only a matter of time before these systems make irreversible mistakes.

3. Predictive Policing

AI systems are now being used in predictive policing, which tries to forecast where crimes may happen or who may commit them based on historical data, and in risk-assessment tools that courts use to evaluate defendants. In the United States, some of these risk-assessment systems have been shown to disproportionately label Black defendants as high risk.

These tools are often trained on biased data, lack transparency, and are rarely audited. We may not have robots arresting people in their homes, but we do have AI systems shaping who gets policed and detained, behind the scenes.
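Audits of risk-assessment tools often compare error rates across groups rather than overall accuracy. One such check is the false positive rate: among people who did not go on to reoffend, how often was each group labeled high risk? The Python sketch below computes it from hypothetical records; the group labels and counts are invented for illustration.

```python
from collections import defaultdict


def false_positive_rates(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, predicted_high_risk, reoffended) for each person.

    The false positive rate for a group is the share of people who did NOT
    reoffend but were still labeled high risk.
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if reoffended:
            continue  # only people who did not reoffend can be false positives
        negatives[group] += 1
        if predicted_high_risk:
            false_pos[group] += 1
    return {group: false_pos[group] / negatives[group] for group in negatives}


records = (
    [("group_a", True, False)] * 4 + [("group_a", False, False)] * 6
    + [("group_b", True, False)] * 2 + [("group_b", False, False)] * 8
    + [("group_a", True, True)] * 5  # reoffenders: excluded from the false positive rate
)
rates = false_positive_rates(records)
print(rates)
print(f"group_a is wrongly flagged {rates['group_a'] / rates['group_b']:.1f}x as often as group_b")
```

A gap like this is exactly the kind of result a regular audit should surface, and someone should be accountable for explaining it.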

Where are we failing?

We are building AI systems with incredible power, but:

- They are released before they are fully understood - Map failed

- They are not monitored after launch - Measure failed

- There’s no plan when they break - Manage failed

- No one is held accountable - Govern failed

We once thought this was science fiction; now it’s turning into reality. Yet even with all the risks we have seen, most conversations about AI still focus on the technology, and that’s not the only issue.

It’s a Human Problem

When we discuss AI risks, the focus is often on technology. However, AI-related disasters don’t happen only because the technology fails; they also happen because of human decisions. AI is a coding challenge, but it is equally a social, ethical, and governance challenge.

Who gets blamed when AI fails? The model? The developers? The company? No one or everyone?

AI learns from data. If the data is biased, unfair, or incomplete, the AI will adopt and replicate those flaws. For example, Amazon’s hiring tool started downgrading resumes containing the word “women’s,” and risk-assessment tools disproportionately labeled Black defendants as high risk. This didn’t happen because the technology was faulty, but because the data it learned from was flawed.

It is the people who decide:

- What the AI will do

- What rules to follow

- If it can be paused or challenged

If these decisions aren’t ethical, transparent, or safe, then it’s not the machine failing; it’s the human.

Another major ethical problem is the lack of clear accountability. In most cases, when an AI causes harm, no one is held accountable. Companies blame the model, teams say it’s too complex, and the people affected are left without answers.

AI doesn’t decide to be biased or dangerous. Humans build, train, and deploy it. So if it goes wrong, it’s not the machine’s fault; it’s ours. And unless we start taking responsibility now, we are heading toward a robot rebellion we programmed ourselves.

That brings us to the final part of this journey.

Conclusion

The same movie that was a 2004 blockbuster with cool CGI feels, 20 years later, more like a warning. It wasn’t just about killer robots. It was about what happens when we create powerful systems without understanding, watching, managing, and governing them. And that’s exactly what we explored through the lens of the NIST AI Risk Management Framework.

In the movie, it took a moral robot and a brave cop to stop an AI-led rebellion. But in real life, we might not get that chance.

If we are building intelligent systems, we need to be smarter than they are in how we design, deploy, and control them, because AI isn’t just a tech problem, it’s a human responsibility.

So, what do you think? Can we prevent the future from looking like I, Robot?
